The continued growth of the Internet of Things (IoT), the rising volume of digital traffic, and the increasing adoption of cloud-based applications are key technology trends that are changing the data center landscape. Large or extra-large cloud data centers now house many of the critical enterprise applications that once resided in on-premise data centers. Not all applications have shifted to the cloud, however. The reasons vary and include regulations, company culture, proprietary applications, and latency, to name a few.

Why Cloud Computing is Requiring us to Rethink Resiliency at the Edge

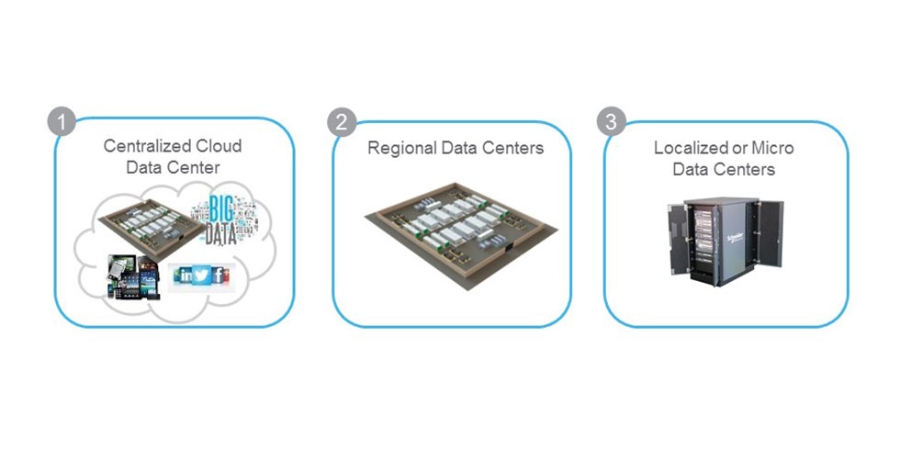

As a result, we’re left with what we refer to in this paper as a “hybrid data center environment”: a mix of (1) centralized cloud data centers, (2) regional medium to large data centers, and (3) localized, smaller, on-premise data centers (see figure). In this paper, we describe the practices commonly seen in these three data center types, discuss how expectations of availability have changed, propose a method for evaluating the required level of resiliency for this hybrid environment, and describe best practices for implementing micro data centers at the edge.

Types of data centers

Large multi-megawatt centralized data centers, whether they are part of the cloud or owned by an enterprise, are commonly viewed as highly mission-critical and, as such, are designed with availability in mind. Proven best practices have been deployed for years to keep these data centers from going down. In addition, these sites are commonly designed, and sometimes certified, to Uptime Institute’s Tier 3 or Tier 4 standards. Colocation and cloud providers often tout these high-availability design attributes as selling points for moving to their data centers.

Regional data centers are closer to the end points (i.e., where data is created and used) and smaller than the large centralized data centers. They exist to bring latency- or bandwidth-sensitive applications closer to the point of use, and they are strategically located to address high-volume needs. These data centers can be thought of as the “bridge” between central data centers and on-premise localized data centers. Like the large centralized data centers, regional data centers are typically designed with security and availability in mind. It is not uncommon to see Tier 3 designs in facilities like these. Prefabricated design approaches are sometimes deployed here, and reference designs are available as a starting point.

A localized data center is one that is co-located with its users. A number of terms are used to describe these data centers, including on-premise data center and micro data center. Localized data centers can range in size from 1-2 MW down to as little as 10-20 kW. As enterprises outsource more and more of their business applications to cloud or colocation providers, these data centers are trending towards the smaller end of that range, sometimes with only a couple of racks left in a small room or closet. In many of these down-sized data centers today, the design practices equate to a Tier 1 design, with little thought given to redundancy or availability. It is not uncommon to see no security, unorganized racks, no redundancy, no dedicated cooling, and no DCIM. Sites often end up looking like this because, as enterprises shift to the cloud or colocation, the few racks that remain are thought of as less important. The focus tends to be on ensuring availability of the bigger data centers. This logic is flawed, however, because the remaining racks are often equally, if not more, mission critical.

A more comprehensive availability metric

The tools we use as a data center industry today focus on making a single data center as robust as possible. Tier levels help us design a single site to achieve a particular availability level (number of 9s). Failure is commonly defined as disruption to any IT equipment within a particular data center. These tools and metrics don’t account for dependence on multiple data centers, the number of users impacted by a failure, the criticality of the business functions impacted, or application (software) fail-over. We believe metrics that account for these factors are necessary moving forward.

The expectations of employees today differ from those of past generations. As the workforce ages and shifts towards a greater percentage of millennials, a new expectation follows. This generation was raised with an “always on, always connected” mentality, in which IT devices and systems are expected to work all the time. Tolerance for disruption in service is low.

If we anticipate this trend continuing, it is crucial that we look at more holistic ways of reporting the resiliency of data centers, ways that give us the visibility needed to make the right design changes. Think about the utility (power) company and how it looks at availability. It doesn’t just look at its generation plants and HV lines (its “centralized data center”). It trims back tree branches, maintains pole-mounted transformers, and ultimately measures success based on delivery of power to its customers (its “edge” data centers). The data center industry needs to move to this utility model, where the edge is as important as (if not more important than) the centralized data centers.

To compute data center availability from a user’s perspective, we multiply the availability of the central data center by the availability of the local data center, since the user depends on both being up. If, for instance, the central data center had an availability of 99.98% (Tier 3, with 1.6 hours of downtime per year) and the on-premise data center had an availability of 99.67% (Tier 1, with 28.8 hours of downtime per year), the total availability from that user’s perspective would be 99.98% × 99.67%, or 99.65% (30.7 hours of downtime per year).
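The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not a method taken from the white paper: the function names are hypothetical, a year is assumed to be 8,760 hours, and failures at the two sites are assumed to be independent. Because it uses the rounded percentages quoted in the text, the per-site downtime it would compute differs slightly from the 1.6 and 28.8 hours cited, which appear to come from less rounded tier figures.

```python
HOURS_PER_YEAR = 8760  # assumption: 365 days x 24 hours


def annual_downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year for an availability between 0 and 1."""
    return (1.0 - availability) * HOURS_PER_YEAR


def serial_availability(*availabilities: float) -> float:
    """Availability seen by a user who needs every listed site to be up.

    Assumes site failures are independent, so the availabilities multiply.
    """
    result = 1.0
    for a in availabilities:
        result *= a
    return result


central = 0.9998  # Tier 3 figure quoted in the text
local = 0.9967    # Tier 1 figure quoted in the text

combined = serial_availability(central, local)
print(f"{combined:.2%}")                                # 99.65%
print(f"{annual_downtime_hours(combined):.1f} hours")   # 30.7 hours
```

The combined figure is always lower than the weakest site on its own, which is why a Tier 1 closet at the edge dominates what the user experiences.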

If we now take the viewpoint of the CIO, the question becomes how to evaluate the impact of the entire ecosystem of data centers on business productivity and connectivity. Not every data center depends on every other data center being up for employees to function, and not all data centers have the same impact on the business. The number of employees impacted is a factor. Business function is a factor. For instance, a site that serves customer service or manufacturing would likely be more critical than one filled with administrators who could work remotely if their network went down.

We propose that the best approach to assessing all sites holistically is a scorecard that helps CIOs and data center managers identify the highest-priority sites for improvement. The scorecard consists of the availability and associated downtime of every site in the hybrid data center environment (ideally measured) and, most importantly, a criticality rating for each site based on the number of people impacted and the function performed. Although this is a qualitative rating system, it provides a systematic approach to looking at all sites in the business’s data center ecosystem. The key is having a consistent method of rating all sites.
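As a rough sketch of how such a scorecard might be tabulated, the Python below ranks sites by a simple priority score. Everything specific here is an assumption made for illustration: the `Site` fields, the 1-to-5 function rating, the scoring formula (downtime × people impacted × function rating), and the sample sites and numbers. The white paper's actual scorecard is not reproduced here and may weigh these factors differently.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760  # assumption: 365 days x 24 hours


@dataclass
class Site:
    name: str
    availability: float   # between 0 and 1, ideally measured
    people_impacted: int   # users who depend on this site
    function_rating: int   # hypothetical scale: 1 (low) to 5 (high) criticality

    @property
    def downtime_hours(self) -> float:
        """Expected annual downtime implied by the availability."""
        return (1.0 - self.availability) * HOURS_PER_YEAR

    @property
    def priority(self) -> float:
        """Hypothetical score: more downtime, people, or criticality ranks higher."""
        return self.downtime_hours * self.people_impacted * self.function_rating


def rank_sites(sites: list[Site]) -> list[Site]:
    """Return sites ordered from highest to lowest improvement priority."""
    return sorted(sites, key=lambda s: s.priority, reverse=True)


# Illustrative sample data only, not figures from the white paper.
sites = [
    Site("central cloud", 0.9998, 2000, 3),
    Site("regional", 0.9998, 500, 3),
    Site("call center closet", 0.9967, 150, 5),
    Site("admin office closet", 0.9967, 40, 1),
]

for site in rank_sites(sites):
    print(f"{site.name:22s} {site.downtime_hours:6.1f} h/yr  score {site.priority:9.0f}")
```

Whatever formula is chosen, applying the same one to every site is what makes the ranking usable.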

With the right availability reporting method in place, it will become apparent where design improvements are necessary to ensure the greatest productivity and business return on investment. In the majority of cases, going through this exercise will demonstrate that the edge data centers, which often have a lower availability, have a higher impact on the business.

Best practices at the edge

The typical design practices at the edge are inadequate given the mission-critical nature of these sites. Improvements should focus on physical security, monitoring (DCIM), redundant power and cooling, and network connectivity.

Prefabricated micro data centers are a simple way to ensure a secure, highly available environment at the edge. Best practices such as redundant UPSs, a secure organized rack, proper cable management and airflow practices, remote monitoring, and dual network connectivity ensure the highest-criticality sites can achieve the availability they require.

For more information on this topic, including an example scorecard for assessing the resiliency of these hybrid environments, please download White Paper 256, Why Cloud Computing is Requiring us to Rethink Resiliency at the Edge.
